Our research aims at understanding how perceptual information is selectively processed by attention and represented in working memory. We are guided by the premise that the organization of perceptual representations constrains attention and working memory processes, and that each of these processes is influenced by current contextual information as well as prior knowledge. We are particularly interested in how diverse sensory inputs from different modalities are integrated to form coherent, multimodal representations.

SOME ONGOING RESEARCH QUESTIONS

How does hearing a sound influence visual processing at the same location?

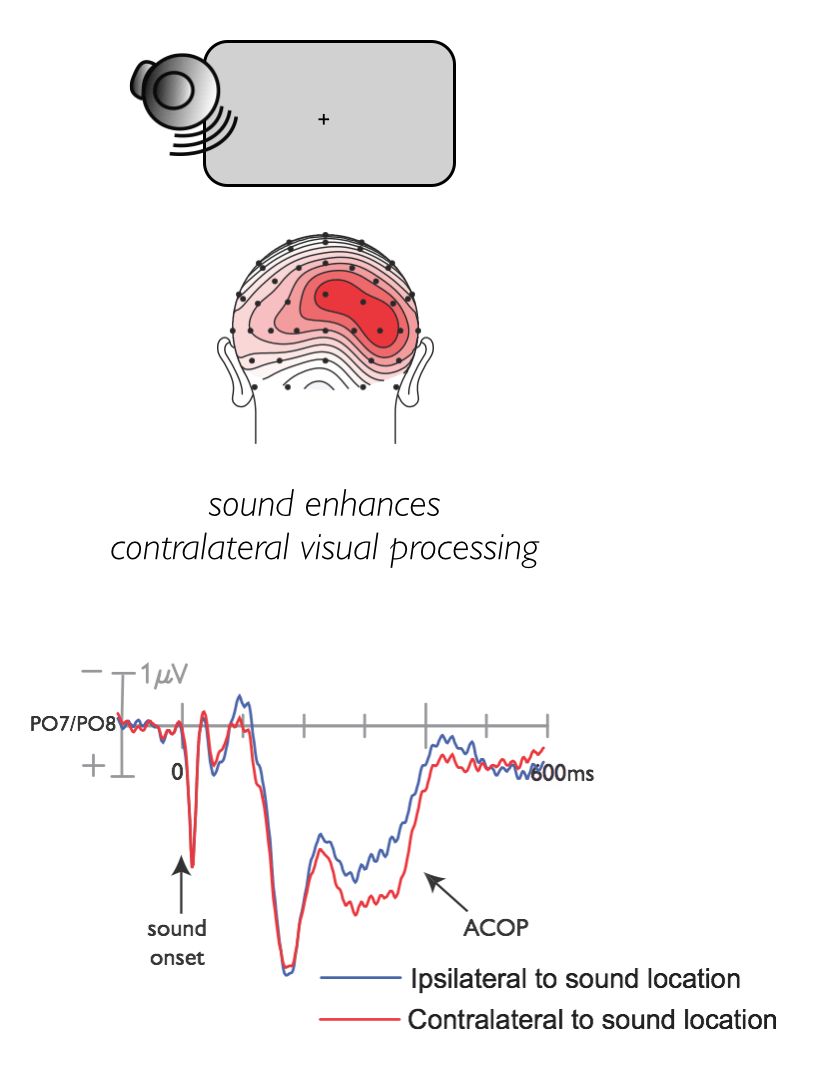

Hearing a sound can influence visual processing of a co-localized visual object: it can make the object appear higher in contrast, or earlier in time, than it actually is. These sound-induced changes in visual perception are accompanied by modulations of the neural processing strength of the visual object in the early visual processing pathways.

What are the neural mechanisms of these cross-modal interactions? We have found that a task-irrelevant, salient sound activates visual cortex automatically, even in the absence of any visual input. Interestingly, these sound-induced changes in visual-cortical processing are spatially specific: they occur in the region of visual cortex that represents the sound's location. In other words, hearing a sound on the left side enhances processing in the right visual cortex, and vice versa.

This line of work shows that our senses are inextricably connected – and perceptual processing in one modality influences perceptual processing in another modality, at least for audition and vision.

Cross-modal cueing of attention alters appearance and early cortical processing of visual stimuli. Störmer, V.S., McDonald, J.J., & Hillyard, S.A. (2009). Proc Natl Acad Sci USA, 106, 22456-22461.

Salient sounds activate human visual cortex automatically. McDonald, J.J., Störmer, V.S., Martinez, A., Feng, W., & Hillyard, S.A. (2013). The Journal of Neuroscience, 33(21), 9194-9201.

Sounds activate visual cortex and improve visual discrimination. Feng, W., Störmer, V.S., Martinez, A., McDonald, J.J., & Hillyard, S.A. (2014). The Journal of Neuroscience, 34(29), 9817-9824.

Cross-modal orienting of visual attention. Hillyard, S.A., Störmer, V.S., Feng, W., Martinez, A., & McDonald, J.J. (2015). Neuropsychologia, 83, 170-178.

Salient, irrelevant sounds reflexively induce alpha rhythm desynchronization in parallel with slow potential shifts in visual cortex. Störmer, V.S., Feng, W., Martinez, A., McDonald, J.J., & Hillyard, S.A. (2016). Journal of Cognitive Neuroscience, 28(3), 433-445.

Orienting spatial attention to sounds enhances visual processing. Störmer, V.S. (2019). Current Opinion in Psychology, 29, 193-198.

Lateralized alpha activity and slow potential shifts over visual cortex track the time course of both endogenous and exogenous orienting of attention. Keefe, J.M. & Störmer, V.S. (2021). NeuroImage, 225, 117495.

What are the fundamental mechanisms of feature-based selection?

We can select a stimulus based on its location in the visual field, or based on its feature, for example its color. What are the mechanisms supporting effective selection based on visual features?

We have found that when attending to a particular color, for example red, processing of items in that color is enhanced throughout the visual field (global excitation), and at the same time processing of colors perceptually similar to the attended color is inhibited. Thus, feature-based selection elicits an excitatory peak at the attended feature with a narrow inhibitory surround in feature space to suppress potentially confusable stimuli during visual perception. Similarly, our research has shown that feature-based attention can be tuned broadly to entire feature ranges. Finally, in recent work we’ve explored how feature-based selection operates in highly competitive environments and have found that attention can act to increase target-distractor distance, which can result in perceptual warping across the entire feature space.

Our studies indicate that several processes involved in feature-based attention resemble what has been found for spatial attention. This, in our view, suggests that there may be a core set of computational principles across domains that can operate based on location or based on features, depending on the task and visual environment.

Feature-based attention elicits surround-suppression in feature space. Störmer, V.S., & Alvarez, G.A. (2014). Current Biology, 24(17), 1985-1988.

Feature-based attention warps the perception of visual features. Chapman, A.F., Chunharas, C. & Störmer, V.S. (2023). Scientific Reports, 13, 6487.

Efficient tuning of attention to narrow and broad ranges of task-relevant feature values. Chapman, A.F., & Störmer, V.S. (2023). Visual Cognition, 31(1), 63-84.

Feature similarity is non-linearly related to attentional selection: evidence from visual search and sustained attention tasks. Chapman, A.F., & Störmer, V.S. (2022). Journal of Vision, 22, 4.

Feature-based attention is not confined by object boundaries: spatially global enhancement of irrelevant features. Chapman, A.F. & Störmer, V.S. (2021). Psychonomic Bulletin & Review, 4, 1252-1260.

What are the limits of spatial selection?

Attentional selection is inherently limited: we can only select a small subset of objects at once. But why do these limits exist?

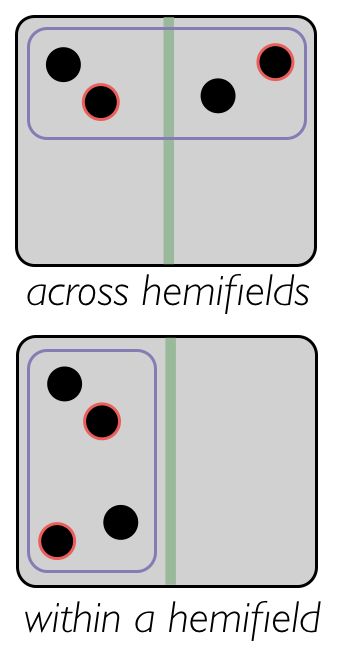

For effective stimulus selection, attention often modulates target representations at the earliest stages of processing – for example, in low-level visual cortex. However, low-level visual cortex is anatomically divided at the hemifield, with left visual cortex processing the right half-field, and the right visual cortex processing the left half-field. We found that attention effectively enhances target representations for objects presented in separate visual fields (left, right), but not when objects are presented in the same visual field (e.g., only left).

These results indicate that attention, a system usually considered high-level, is constrained by the neural architecture of the perceptual systems, in this case the anatomy of the visual cortex. Thus, attentional limits appear not to be fixed, but rather to depend on the nature and content of the neural representations over which attention operates.

Within-hemifield competition in early visual areas limits the ability to track multiple objects with attention. Störmer, V.S., Alvarez, G.A., & Cavanagh, P. (2014). The Journal of Neuroscience, 34(35), 11526-11533.

Sustained multifocal attentional enhancement of stimulus processing in early visual areas predicts tracking performance. Störmer, V.S., Winther, G.N., Li, S.-C., & Andersen, S.K. (2013). The Journal of Neuroscience, 33(12), 5346-5351.

In what ways does attention alter our perception?

Attention is well known to increase the speed and accuracy with which we process a stimulus. But does attention change the way we see an object?

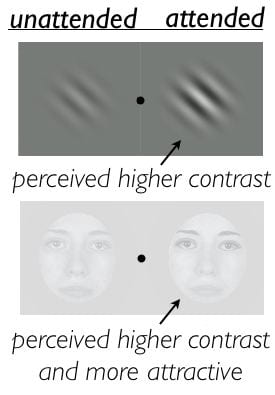

Involuntary (exogenous) cueing of attention can enhance the perceived contrast of a simple object (Gabor patch), by increasing the signal strength of that object at the location of the attentional cue in early visual cortex. More recently we explored whether attention can also increase the contrast of more complex, real-world stimuli like faces, and found that attention increases the perceived contrast of face parts, in particular the region of the eyes. Furthermore, attended faces were judged to be more attractive than unattended faces – possibly because higher contrast faces tend to be judged as more attractive.

These studies show that attention not only helps us by facilitating processing of an attended stimulus, but it fundamentally changes the way we perceive the world – for example, how attractive we find the people around us.

Cross-modal cueing of attention alters appearance and early cortical processing of visual stimuli. Störmer, V.S., McDonald, J.J., & Hillyard, S.A. (2009). Proc Natl Acad Sci USA, 106, 22456-22461.

Attention alters perceived attractiveness. Störmer, V.S., & Alvarez, G.A. (2016). Psychological Science, 27(4), 563-571.

How does attentional selection change in the course of healthy aging?

With advancing age, many cognitive abilities decline. The mechanisms underlying these age-related differences are not yet well understood.

In a series of studies, we investigated in what ways older adults differ from younger adults in their ability to select multiple target objects from nontarget objects. Using EEG, we found that the age-related decline is, at least in part, driven by differences at early processing stages, namely during stimulus processing itself. We found these age-related deficits at early processing stages across different types of tasks, in particular an attentive tracking task and a visual-spatial working memory task.

Importantly, we also found that many of the older adults performed at the same level as many of the younger adults. This subgroup of high-performing older adults nonetheless showed differences in the underlying neural responses, suggesting that selection mechanisms change throughout the lifespan.

Normative shifts of cortical mechanisms of encoding contribute to adult age differences in visual-spatial working memory. Störmer, V.S., Li, S.-C., Heekeren, H.R., & Lindenberger, U. (2013). NeuroImage, 73, 167-175.

Normal aging delays and compromises early multifocal visual attention during object tracking. Störmer, V.S., Li, S.-C., Heekeren, H.R., & Lindenberger, U. (2013). Journal of Cognitive Neuroscience, 25(2), 188-202.

Feature-based interference from unattended visual field during attentional tracking in younger and older adults. Störmer, V.S., Li, S.-C., Heekeren, H.R., & Lindenberger, U. (2011). Journal of Vision, 11, 1-12.

Dopaminergic and cholinergic modulation of working memory and attention: insights from genetic research and implications for cognitive aging. Störmer, V.S., Passow, S., Biesenack, J., & Li, S.-C. (2012). Developmental Psychology, 48, 875-889.