Dartmouth has been using Canvas as an extension of face-to-face (F2F) classes for course material delivery, quiz administration, and asynchronous discussion and communication. As such, we are particularly interested in learning how our students use Canvas to prepare for their final exams.

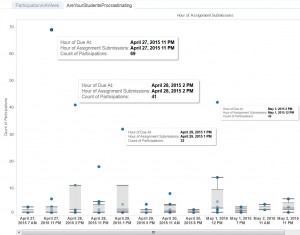

We gathered students’ Canvas page view activity data from the final exam period, along with their final exam scores. Since each course used its own grading scheme for the final exam, each score was rescaled by dividing the points received by the points possible assigned to that exam. We examined the number of page views made by individual students and the time they spent on each page. Because the number of views and the time spent are highly correlated, we decided to use only one of the two variables in the further analysis.
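The rescaling and the correlation check described above can be sketched as follows. This is a minimal illustration, not the code used in the analysis: the function names and the sample numbers are hypothetical, and the Pearson coefficient is an assumed choice of correlation measure.

```python
import math


def rescale_score(points_received, points_possible):
    """Express a final exam score as a fraction of the points possible."""
    if points_possible <= 0:
        raise ValueError("points_possible must be positive")
    return points_received / points_possible


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-student values, made up for illustration only.
page_views = [12, 40, 7, 55, 23]
minutes_spent = [30.0, 95.0, 20.0, 140.0, 60.0]

r = pearson_r(page_views, minutes_spent)
# A coefficient close to 1 would support keeping only one of the two variables.
print(f"correlation between views and time spent: {r:.3f}")
```

Dividing by the points possible puts exams graded out of 50 and exams graded out of 200 on the same 0 to 1 scale, which is what allows scores from different courses to share one scatterplot.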

The residual plot and Q-Q plot suggested that the assumptions of simple linear regression were not satisfied, so we applied a natural logarithm transformation to the independent variable. We also drew a lowess line on the scatterplot and identified one point (87%) that might be of interest. In addition, outliers were identified and removed by referencing both leverage and Cook’s distance. Although the log transformation of the predictor helped to satisfy the regression assumptions, the model still explained less than 1% of the variation, so we decided not to present the model and to visualize the results in a scatterplot instead.
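The diagnostics mentioned above (log-transformed predictor, R², leverage, and Cook’s distance) can be sketched for a simple linear regression as follows. This is an illustrative sketch under stated assumptions, not the analysis code: the data are made up, page view counts are assumed to be strictly positive so that the logarithm is defined, and the 4/n cutoff for Cook’s distance is a common rule of thumb rather than the threshold used here. The lowess smoothing step is not reproduced.

```python
import math


def fit_simple_ols(xs, ys):
    """Fit y = intercept + slope * x and return basic regression diagnostics."""
    n = len(xs)
    p = 2  # number of fitted parameters: intercept and slope
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    sse = sum(e * e for e in residuals)
    sst = sum((y - mean_y) ** 2 for y in ys)
    r_squared = 1.0 - sse / sst

    # Leverage of each observation in a one-predictor model.
    leverage = [1.0 / n + (x - mean_x) ** 2 / sxx for x in xs]

    # Cook's distance combines residual size with leverage.
    mse = sse / (n - p)
    cooks = [
        (e * e / (p * mse)) * h / (1.0 - h) ** 2 if mse > 0 else 0.0
        for e, h in zip(residuals, leverage)
    ]
    return {
        "slope": slope,
        "intercept": intercept,
        "r_squared": r_squared,
        "leverage": leverage,
        "cooks_distance": cooks,
    }


# Hypothetical data: page views (assumed > 0) and rescaled exam scores.
page_views = [5, 12, 20, 33, 48, 60, 75, 400]
scores = [0.62, 0.91, 0.70, 0.85, 0.78, 0.95, 0.66, 0.20]

log_views = [math.log(v) for v in page_views]  # natural log of the predictor
fit = fit_simple_ols(log_views, scores)

# Flag observations above the assumed 4/n rule-of-thumb cutoff.
cutoff = 4.0 / len(scores)
flagged = [i for i, d in enumerate(fit["cooks_distance"]) if d > cutoff]
print(f"R^2 = {fit['r_squared']:.3f}, flagged indices = {flagged}")
```

An R² near zero on real data, as reported above, means the fitted line carries almost no explanatory power, which is why a scatterplot is the more honest summary.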

- Overall, students’ performance (points received/points possible) on the final exam ranges from very low to very high, and quite a few students achieve fair performance on the final exam without significant Canvas page view activity.

- On average, students who received grades greater than 87% on final assignments/exams tended to spend more time navigating through Canvas during final exam week.

- In contrast, the almost horizontal regression curve for students who scored less than 88% on their final exam indicates that saturation is reached quickly: reviewing materials during final exam week does not correlate with better performance on the final exam. In other words, navigating through Canvas has only a marginal effect on final exam preparation for students who performed at B- level or lower (grades less than 88%).

The analysis is derived from the Canvas page view activity of students who use Canvas as an extension of F2F learning. The data suggest that many students used Canvas to review course materials during final exam week. However, we have very limited data on their study activities beyond Canvas and the classroom, and students’ performance on the final exam can be significantly affected by study activities conducted offline and outside the classroom. Therefore, it is instrumental to identify new learning behaviors taking place across a variety of digital platforms when we try to understand how our students learn.