A refurbishment of CERN’s Large Hadron Collider (LHC) is nearing completion. The revamped LHC will approach its design energy of seven trillion electron volts per proton in each beam. At that energy, the total energy stored in a beam is comparable to that of a speeding freight train (1).

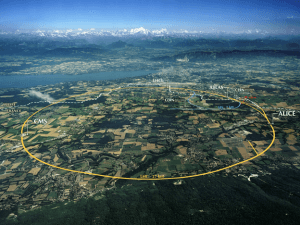

An aerial shot of the European Organization for Nuclear Research (CERN). It is based in the northwest suburbs of Geneva on the Franco-Swiss border, and operates the Large Hadron Collider, the world’s most powerful particle collider.

The last time researchers tried to run the LHC at high energy, an electrical short circuit knocked it out of commission for more than a year and caused tens of millions of dollars in damage. Although the LHC was running again by the end of 2009, it has since been operated at only about half of its design energy to avoid another electrical fault (1).

Even the lower energy was enough for the beam collisions to yield evidence of the Higgs boson, the last unconfirmed prediction of the standard model of particle physics. This 40-year-old theory describes the behavior of every known particle and force except gravity. Researchers hope that the higher-energy collisions made possible by running the LHC near its design energy will advance our understanding of the physical world even further (1).

One question that researchers hope to address through high-energy collisions at the LHC is whether the Higgs particle is the only one of its kind or the lightest in a family of particles. There is also hope that other new particles might appear at higher collision energies. Discoveries of this sort would push beyond the current standard model of particle physics.

One of the biggest challenges associated with the refurbished LHC is not related to the machine itself, but to the processing of the data that it will output. Currently, the data flows to the CERN computing center, a room that houses 100,000 processors and enough fans to cool them. These processors analyze the data and store the results on magnetic tape (1).

According to Jeremy Coles, a physicist at Cambridge, the “sky-high” data rates to come will pose a major challenge (1). During its first run, the LHC produced 15 petabytes of data in a year, more than the amount of data uploaded to YouTube annually. The revamped LHC is projected to produce twice as much, an average of roughly one gigabyte every second (1).
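The conversion from petabytes per year to gigabytes per second can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name is hypothetical, and it assumes decimal units (1 petabyte = 10^15 bytes, 1 gigabyte = 10^9 bytes) and data spread evenly over a full calendar year, which is a simplification since the collider does not run continuously.

```python
# Assumptions (not from the source article): decimal units and
# output averaged evenly over a 365.25-day year.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60
BYTES_PER_PETABYTE = 10**15
BYTES_PER_GIGABYTE = 10**9


def average_rate_gb_per_s(petabytes_per_year: float) -> float:
    """Convert an annual data volume into an average rate in GB/s."""
    bytes_per_second = petabytes_per_year * BYTES_PER_PETABYTE / SECONDS_PER_YEAR
    return bytes_per_second / BYTES_PER_GIGABYTE


if __name__ == "__main__":
    # 15 PB/year is the first-run figure quoted above; 30 PB/year is twice that.
    for label, volume in [("first run", 15), ("revamped LHC", 30)]:
        print(f"{label}: {average_rate_gb_per_s(volume):.2f} GB/s")
```

Under these assumptions, 30 petabytes a year works out to about 0.95 gigabytes per second, consistent with the rounded figure of one gigabyte every second.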

Coles is confident that the computing network will be able to handle that increase, partly because of advances in networking. However, further upgrades to the LHC are expected to raise its output to more than 110 petabytes of data annually by early 2020, and eventually to as much as 400 petabytes a year.

To add to the difficulty of processing data from the LHC, new computer chips are gaining performance by adding more processor cores rather than by running each core faster. That trend does not help with the LHC data as things stand, because the analysis code was written to run on a single processor. Using many processors would require rewriting the code for parallel execution, a task that involves more than 15 million lines of code developed over many years by thousands of people (1).
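To give a sense of what such a rewrite involves, here is a minimal Python sketch contrasting a serial loop with a parallel version of the same toy analysis. It is not CERN's code: the `reconstruct` function, the `Event` type, and the idea of an event as a list of energy deposits are all hypothetical stand-ins. The sketch also assumes each event can be processed independently, which is the easy case; much of the real difficulty lies in code that shares state between steps.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
from typing import List, Sequence

# Hypothetical data model: one collision event as a list of energy deposits.
Event = Sequence[float]


def reconstruct(event: Event) -> float:
    """Toy stand-in for per-event analysis: sum the deposited energy."""
    return sum(event)


def analyze_serial(events: Sequence[Event]) -> List[float]:
    """One processor handles every event, one after another."""
    return [reconstruct(event) for event in events]


def analyze_parallel(
    events: Sequence[Event],
    workers: int = 4,
    executor_cls=ProcessPoolExecutor,
) -> List[float]:
    """Spread independent events across several workers.

    ProcessPoolExecutor uses separate processes, so the work can run on
    several cores; ThreadPoolExecutor can be swapped in for quick checks.
    Executor.map returns results in the same order as the input.
    """
    with executor_cls(max_workers=workers) as pool:
        return list(pool.map(reconstruct, events))
```

When run as a script with the default process pool, the call to `analyze_parallel` should sit under an `if __name__ == "__main__":` guard, since some platforms start worker processes by re-importing the main module. The two functions return identical results; only the scheduling of the work differs.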

Nonetheless, technology can advance in unforeseen ways. When CERN researchers needed better ways to share information in the 1980s, the World Wide Web was invented there. When they needed better access to computing resources in the 1990s, they created the world’s largest computing network. Perhaps they will overcome this challenge as they have overcome others in the past (1).

Sources:

1. Chalmers, M. (2014, October 8). Large Hadron Collider: the Big Reboot. Nature.com. Retrieved October 11, 2014, from http://www.nature.com/