Frankie Carr ‘22, Biological Sciences, Winter 2021

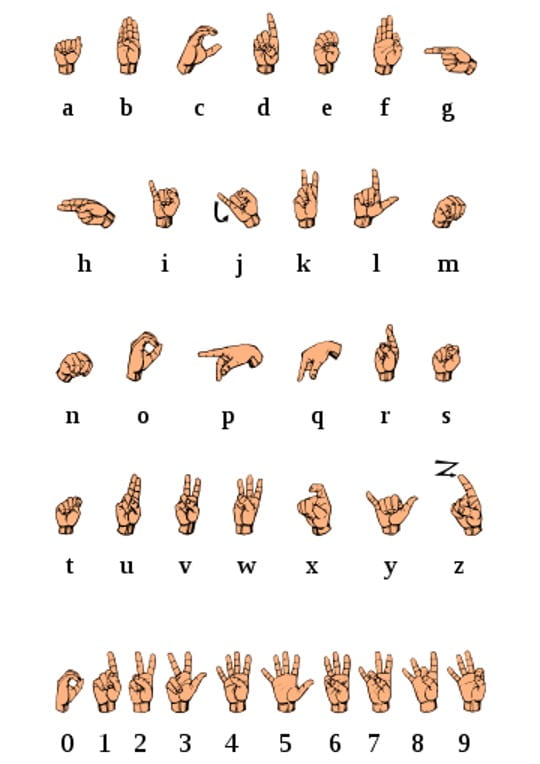

Figure: The English alphabet and numbers 1-9 as signed in American Sign Language (ASL) (source: Wikimedia Commons).

When considering language, most people think of spoken, verbal communication between people. However, speech is only one aspect of language, which is much more than an auditory phenomenon. Aside from so-called “non-verbal communication,” Sign Languages are “spoken” entirely without auditory cues. Researchers at the Max Planck Institute performed a meta-analysis of studies on how the brain processes Sign Languages and Spoken Languages and confirmed that, despite the absence of such cues, similar parts of the brain are involved in the understanding and production of both forms of communication (Trettenbrein et al., 2021).

In most individuals, Spoken Languages are processed primarily in the left hemisphere of the brain, especially in regions such as Broca’s Area (used for speech production and language processing), the Angular Gyrus (used for semantic processing and comparison of auditory information to stored information), and Wernicke’s Area (used for language comprehension). Of these language areas, Broca’s Area is the main location used for the production of spoken language; in fact, damage to Broca’s Area can result in loss of the ability to produce coherent speech (a condition called Broca’s Aphasia). It has been theorized that this lateralization of language in those who use Spoken Languages is a result of the left side of the brain having become more specialized, via natural selection, for temporal processing (i.e., processing stimuli that change over time, such as the sequential stringing together of sounds into words and then words into sentences, as seen in Spoken Languages) (Schönwiesner et al., 2005).

Those who communicate primarily via Sign Language due to deafness showed activation of similar brain areas while processing information communicated through signs. The researchers demonstrated that Broca’s Area in the left hemisphere is just as active in the brains of those using Sign Language as in the brains of those who can hear and speak. On this basis, the authors argue that Broca’s Area and other parts of the Inferior Frontal Gyrus serve as a hub for processing and producing language, regardless of the form of the language (Trettenbrein et al., 2021).

Furthermore, the homolog of Broca’s Area in the right hemisphere appears to be just as active during Sign Language processing as its left-hemisphere counterpart. This area is relatively inactive in the processing of auditory information but seems to be used to interpret nonverbal cues that accompany speech, such as posture and bodily movements. Its recruitment for Sign Languages is likely a result of these nonauditory languages depending more heavily on the movements and actions of the signer. It has been posited that the inclusion of more right-hemisphere areas in the language processing of Sign Language communicators is a result of the right hemisphere being used more for simultaneous processing of stimuli from multiple locations. Unlike the sequential processing required for Spoken Languages, understanding the exact meaning of a signer’s communication requires the recipient to simultaneously process the information presented by the signer’s hands, body, and face, which leads to recruitment of the right hemisphere (Trettenbrein et al., 2021).

Although the authors did not connect their conclusion about the universality of Broca’s Area to the Language Acquisition Device posited by Chomsky, their findings may serve as evidence for it. The linguist Noam Chomsky was among the first to argue for the Nativist Theory of Language: that language is an intrinsic property of humans and that we possess the ability to learn language as an innate skill. He argued that this ability to learn languages, called the Language Acquisition Device, is innate to all humans and that we therefore all communicate through a Universal Grammar (Chomsky, 1965). While support for this theory is mixed, there is some tangible evidence in its favor. This includes the fact that infants who are incapable of producing Spoken Language or who are not exposed to Spoken Language will “babble” like their verbal counterparts. They do this babbling with their hands, mimicking the process of producing nonsensical speech but doing so through the modality of Sign Language (Petitto and Marentette, 1991). Based on this and the research done by Trettenbrein and colleagues, it is quite possible that Broca’s Area is one of the regions of the brain used for this innate ability to acquire language (i.e., it may be a part of the Language Acquisition Device). Further research into how Broca’s Area acts during the learning of a language and during infant babbling could help validate this theory.

References:

Chomsky, N. (1965). Aspects of the theory of syntax. M.I.T. Press.

Max Planck Institute for Human Cognitive and Brain Sciences. (2021, February 19). How the brain processes sign language. ScienceDaily. www.sciencedaily.com/releases/2021/02/210219124236.htm

Petitto, L., & Marentette, P. (1991). Babbling in the manual mode: Evidence for the ontogeny of language. Science, 251(5000), 1493–1496. https://doi.org/10.1126/science.2006424. ISSN 0036-8075. PMID 2006424.

Schönwiesner, M., Rübsamen, R. and Von Cramon, D.Y. (2005), Hemispheric asymmetry for spectral and temporal processing in the human antero‐lateral auditory belt cortex. European Journal of Neuroscience, 22: 1521-1528. https://doi.org/10.1111/j.1460-9568.2005.04315.x

Trettenbrein, P. C., Papitto, G., Friederici, A. D., & Zaccarella, E. (2021). Functional neuroanatomy of language without speech: An ALE meta‐analysis of sign language. Human Brain Mapping, 42, 699–712. https://doi.org/10.1002/hbm.25254
